ZipCrit on AR

Review of Google Translate Augmented Reality

Type

- Individual Work

- App Critique

Duration

- 30 minutes

My Role

- Critical User

What I Did

- Design Guide Study

- In-App Exploration

- App Critique

Project Background

Get Familiar with AR Design Guidelines

As part of the Introduction to AR/VR Application Design course, I was challenged to conduct a quick critique of an AR/VR interface, object, or design. To ground my critique, I decided to base it on the Google Augmented Reality (AR) Design Guidelines.

Critique Overview

Affordance is Key

I picked the Camera feature in Google Translate as the target of my critique because I had seen several YouTube videos, filmed by YouTube creators during Google I/O 2018, about their experience with it: they went to a restaurant with menus in Japanese and successfully used the Camera feature to translate them into grammatically correct English.

In my experience, Google Translate works well for vocabulary translation but not so well for longer content. Since I hadn't used it for a while, and it seemed to work well in the YouTube videos, I was excited to test it out with English-Chinese translation myself.

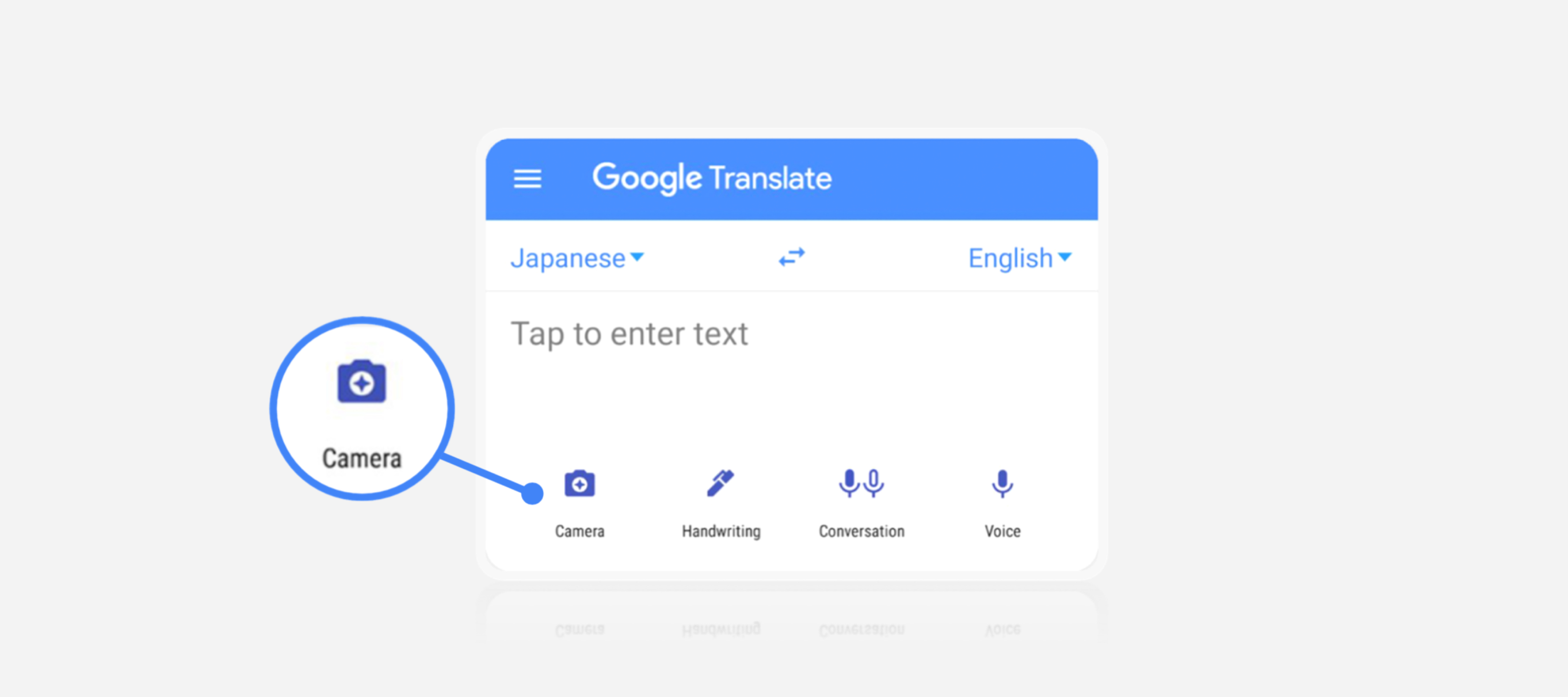

Camera feature in Google Translate app

Feature Overview

I downloaded the Traditional Chinese language pack before I moved on to test the Camera feature.

Offline Mode

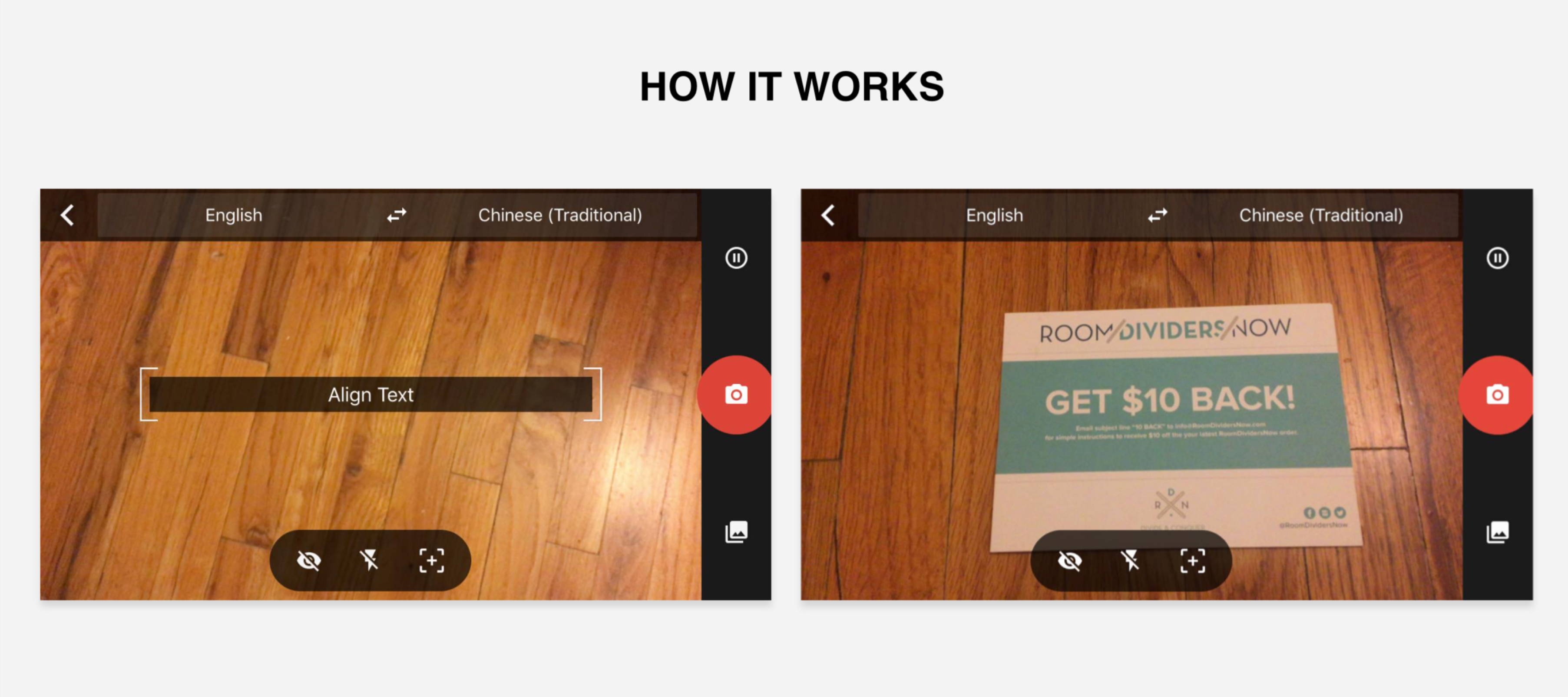

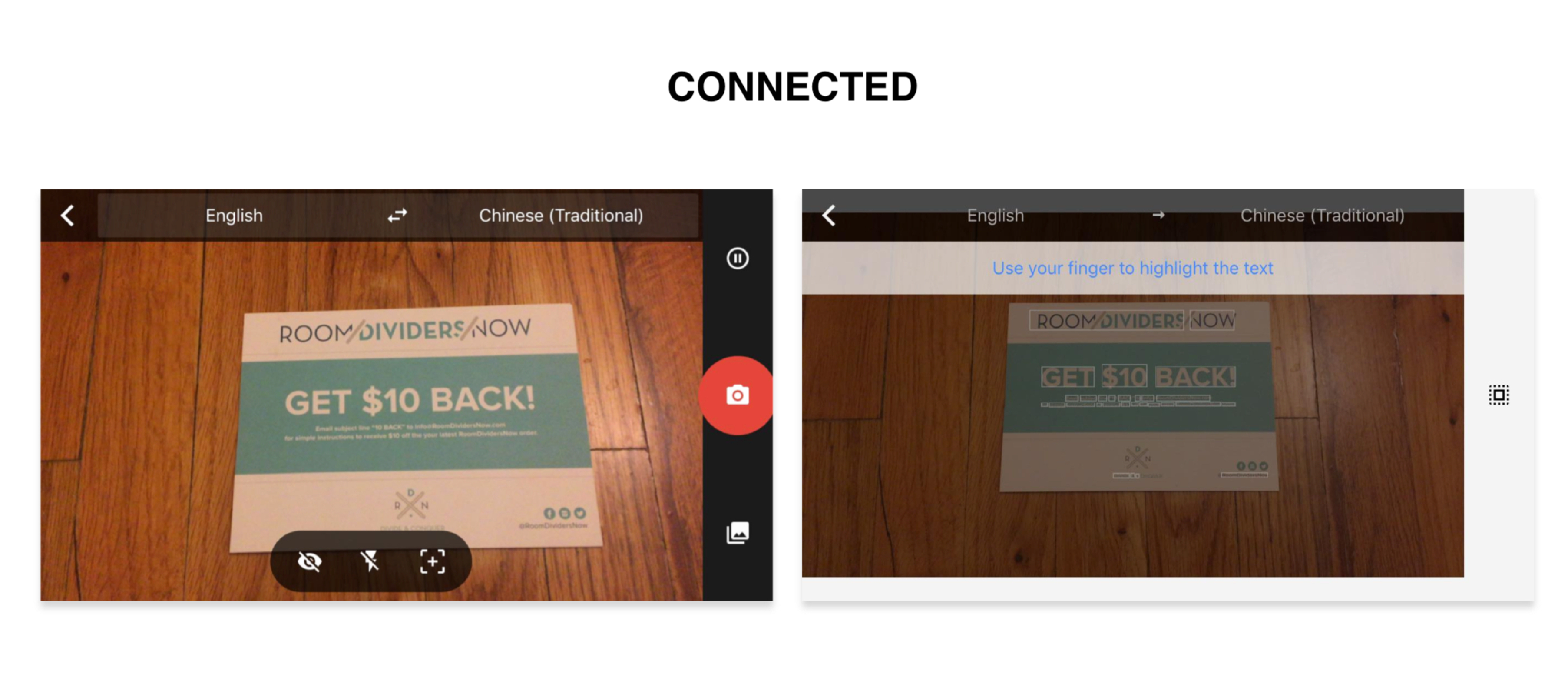

After I opened the Camera, I followed the instructions in the center of the view to align text in front of my camera. The target for translation was a commercial flyer written in English. The "Align Text" box disappears once text is detected in the view.

Screenshots of Camera in Google Translate app

AR Translation

To see the translation, I tapped the Instant On button (the eye icon). The status bar turns green when the translation shows up. At the same time, the translation is augmented onto the physical object (the flyer in this case).

Turn on Instant On to see AR translation

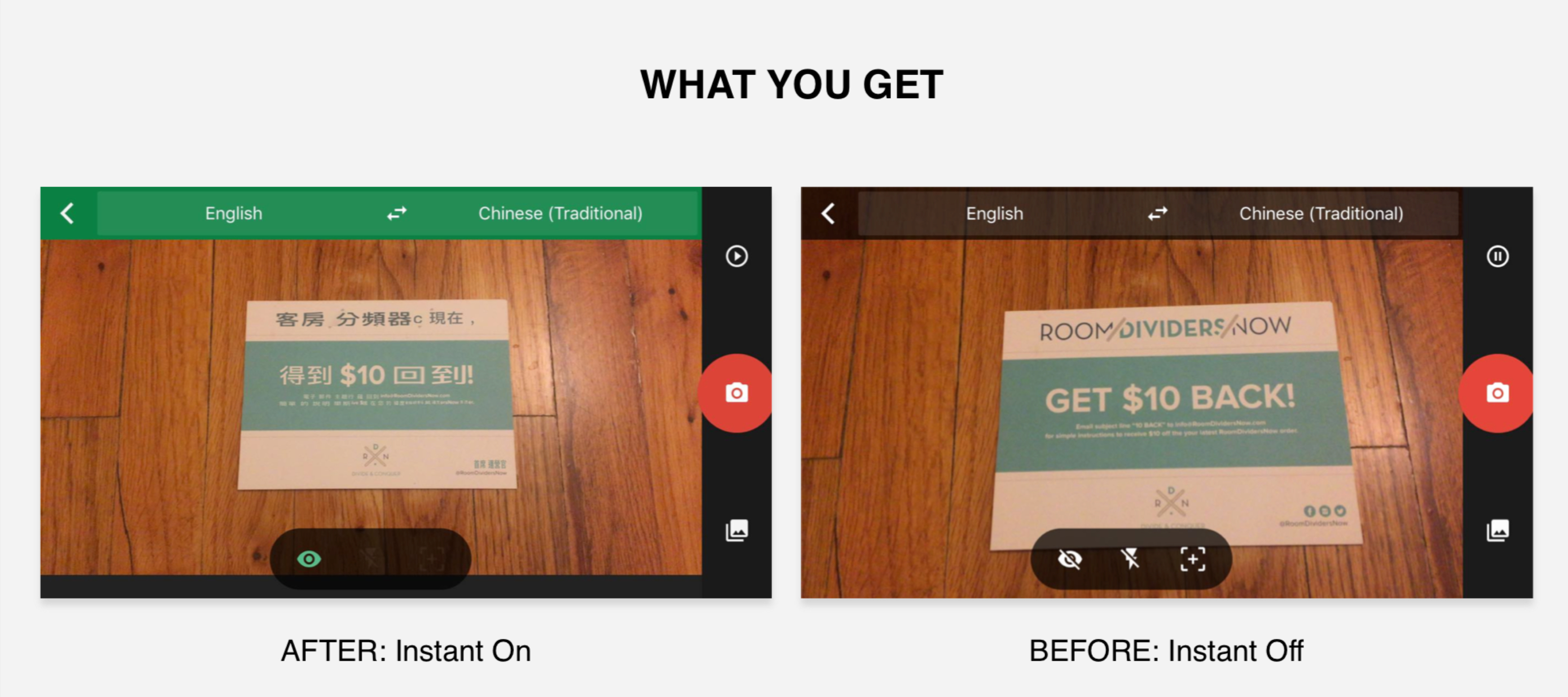

Accuracy & Detection

My phone camera constantly re-detects the size, color, and content of the flyer, which is what leads to the two different versions of the translation below. The content of the flyer is chunked into several word units, and the translation is based on these units. Hence, the translation might be correct at the word level but not at the phrase or sentence level. The detection also still has room for improvement: in the translation on the right, "$10" was detected as "羅 (Lo)", a common last name in Chinese. To stop the camera from detecting continuously, tap the pause button on the upper right.

Translation based on camera detection

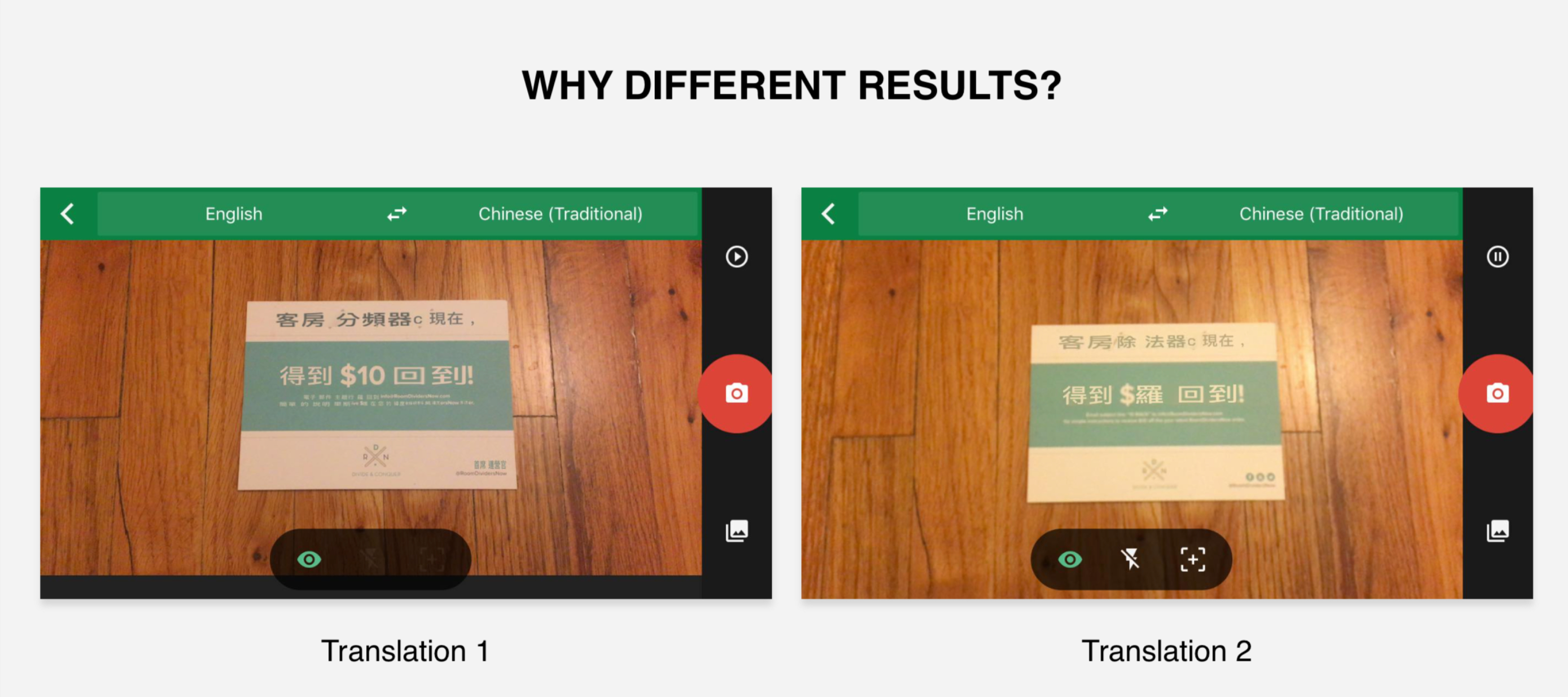

Online Mode

To translate partial content, we first need to take a picture of the target using the red button on the right. Then, follow the instructions on the status bar to highlight the target with a finger, or tap the square icon on the right to select all. This feature is only available when the device is connected to the Internet.

Features available under online mode

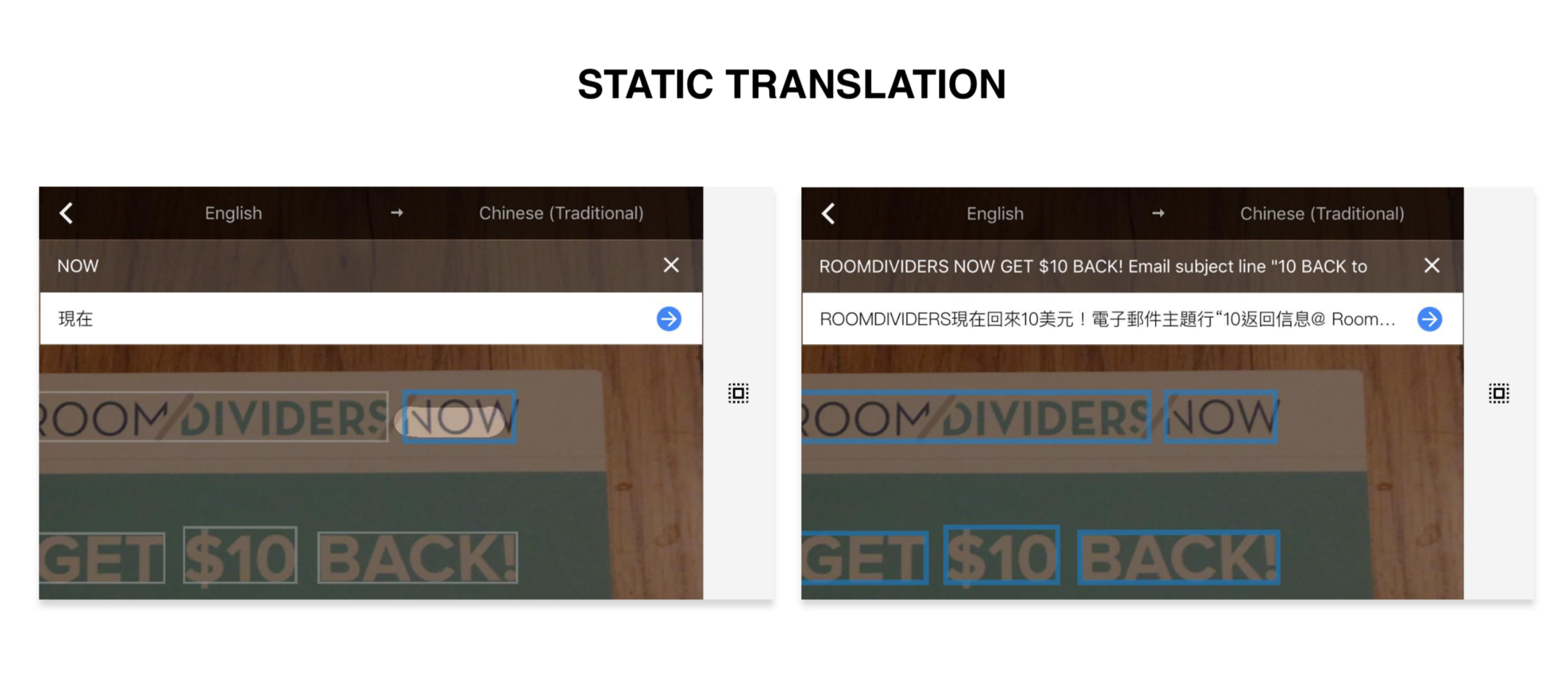

Static Translation

Partial translation isn't completely customizable: the content is chunked into several units, and not every unit contains only one word. As for select-all translation, the result can be exported and viewed on the homepage.

Partial (left) and Select-All translation (right)

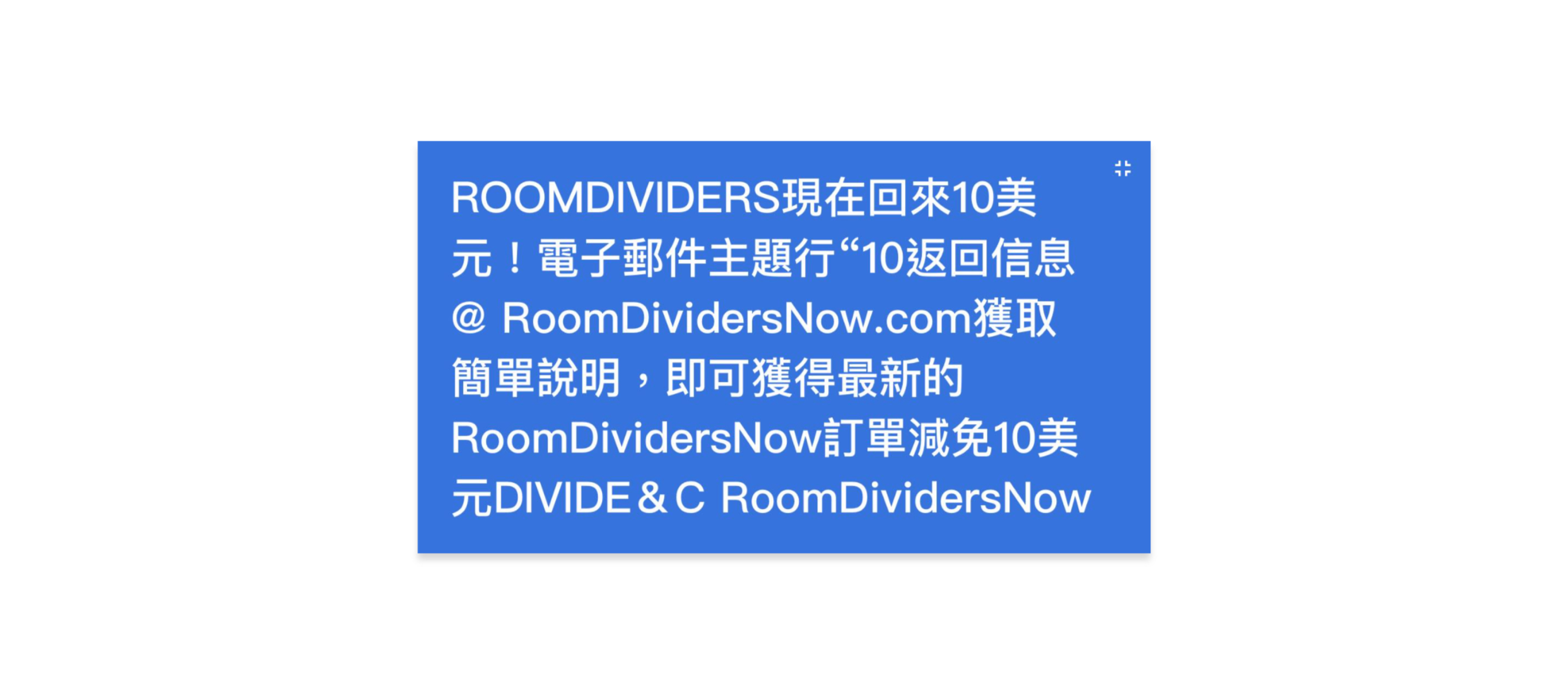

Translation Exported from Select-All result

Critique

After trying out the features myself, I conducted the critique based on the Google AR Design Guidelines and summarized it into three pros and three cons:

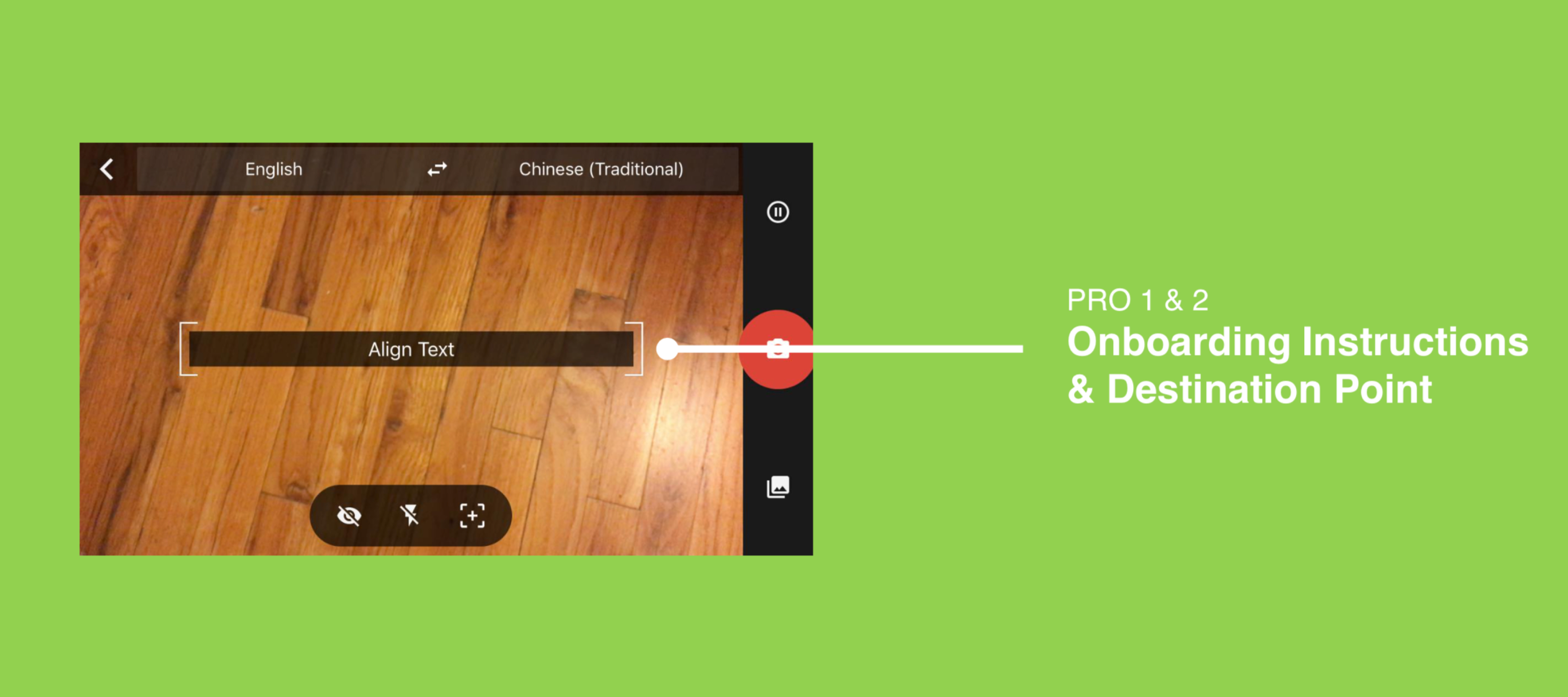

Pro 1: Onboarding Instructions

The "Align Text" box serves as a contextual instruction on where and how to start, presented as part of the on-screen interactions when users enter the camera view.

Pro 2: Destination Point

The shaded "Align Text" box helps users envision where the augmented translation will be placed after they turn on the Instant On feature (the eye icon).

"Align Text" box provides onboarding instructions and serves as a destination point

Pro 3: Manual Placement

Users have control over when the AR translation appears. The translation is augmented as soon as Instant On is turned on. (Manual placement is more suitable for instant augmentation, while auto placement suits augmentation that takes a while to render.)

Google Translate AR uses manual placement for instant placement of the augmented translation

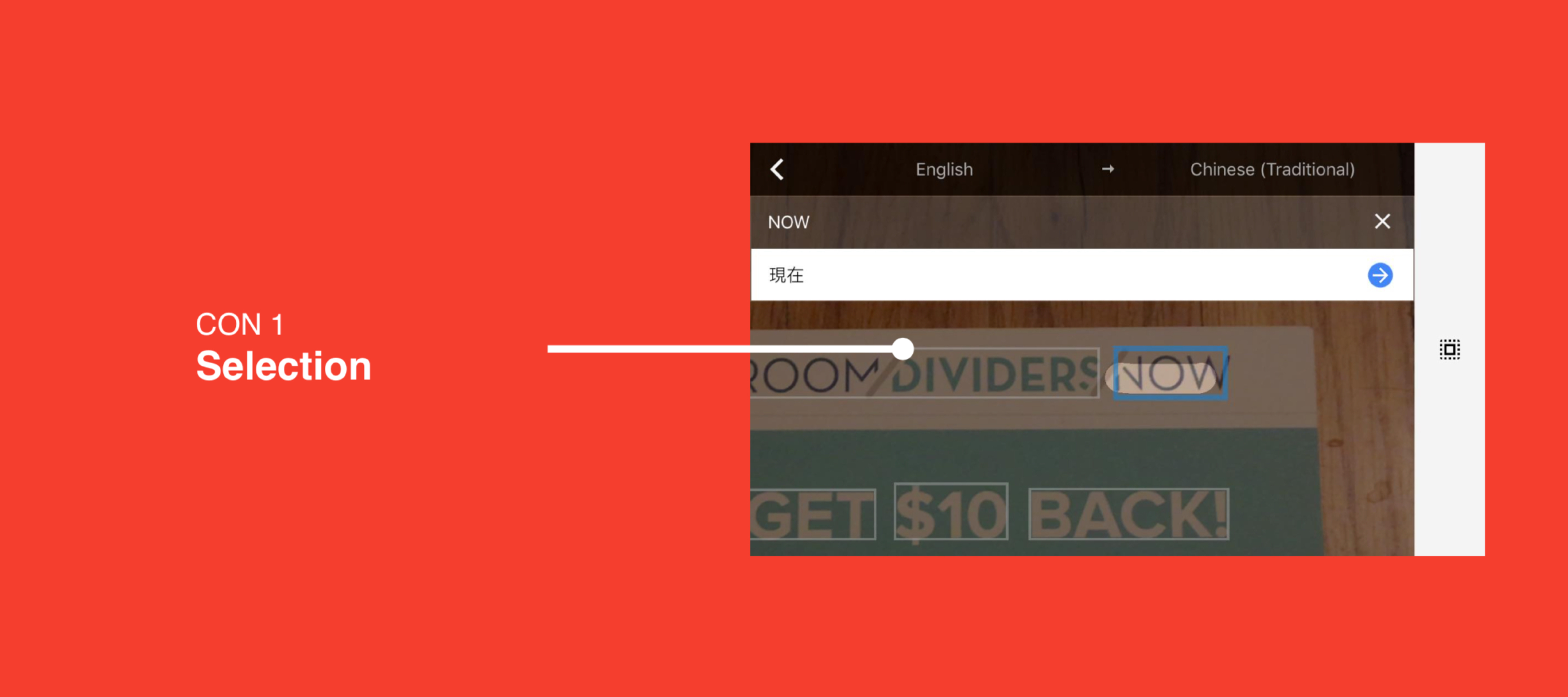

Con 1: Selection

Even though the app allows word-level translation by tapping or highlighting a word with a finger, this isn't convenient given the size of the screen and the content, because the finger blocks the very word being selected. Also, the selection is bound to the chunked units detected by Google Translate.

Selection using a finger blocks the view of the content itself and is limited by the predefined translation unit.

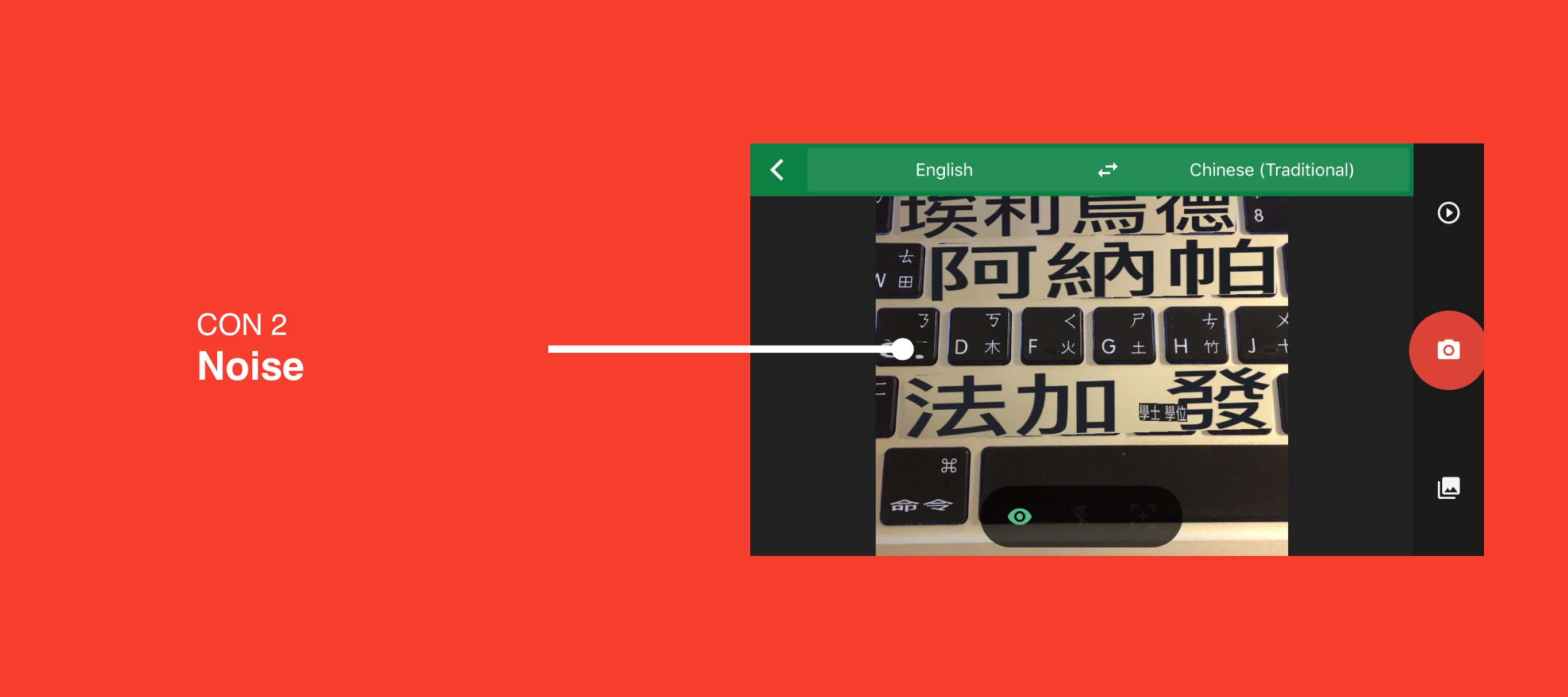

Con 2: Noise

The detection is subject to a lot of noise: it could not recognize the Taiwanese phonetic symbols on my keyboard, yet it still tried to match them to similar Traditional Chinese characters, which produced a translation that makes no sense.

It's subject to noise when it cannot precisely tell what the content is.

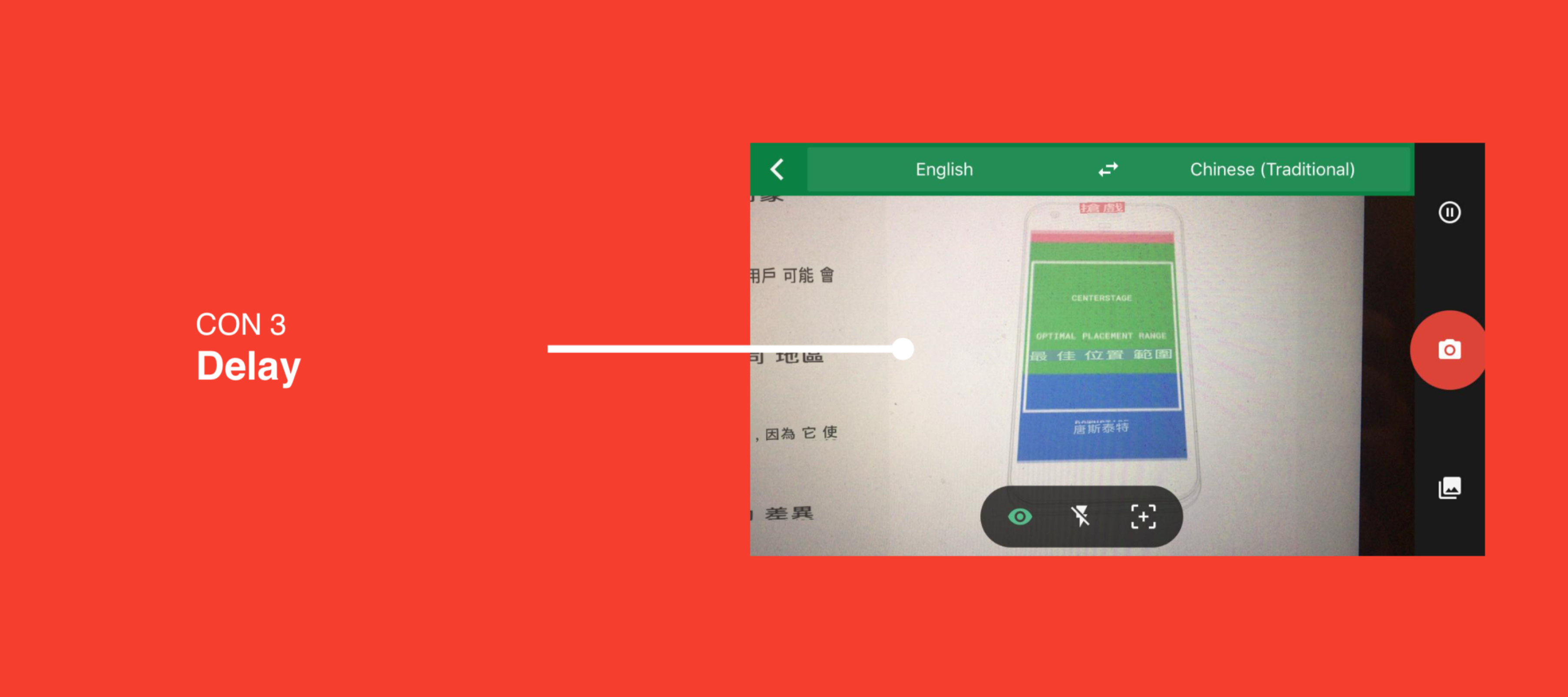

Con 3: Delay

There is a delay in augmenting the translation when the subject being translated isn't static.

Delay caused by content movement

Social Impact

While Google Translate AR doesn't have good affordance at the sentence or paragraph level, it is still convenient for travel: it allows instant offline translation and, by generating different versions of a translation, lets users grasp the approximate meaning of a word.